Prompting

Think of an LLM as a smart new employee (with amnesia) who needs explicit instructions. Here are some best practices:

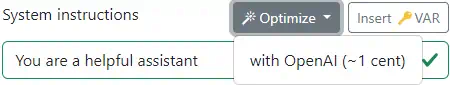

Use prompt optimizers

Prompt optimizers rewrite your prompt to improve it. The Playground has one: select the Optimize button.

Be clear, direct, and detailed

Be explicit and thorough. Include all necessary context, goals, and details so the model understands the full picture.

- BAD: Explain gravitational lensing. (Reason: Vague and lacks context or detail.)

- GOOD: Explain the concept of gravitational lensing to a high school student who understands basic physics, including how it’s observed and its significance in astronomy. (Reason: Specifies the audience, scope, and focus.)

When you ask a question, don’t just mumble. Spell it out. Give every detail the listener needs. The clearer you are, the better the answer you’ll get. For example, don’t just say, “Explain gravitational lensing.” Say, “Explain the concept of gravitational lensing to a high school student who understands basic physics, including how it’s observed and its significance in astronomy.”

Give examples

Provide 2-3 relevant examples to guide the model on the style, structure, or format you expect. This helps the model produce outputs consistent with your desired style.

- BAD: Explain how to tie a bow tie. (Reason: No examples or reference points given.)

- GOOD: Explain how to tie a bow tie. For example:

  - To tie a shoelace, you cross the laces and pull them tight...

  - To tie a necktie, you place it around the collar and loop it through...

  Now, apply a similar step-by-step style to describe how to tie a bow tie. (Reason: Provides clear examples and a pattern to follow.)

Give examples to the model. If you want someone to build a house, show them a sketch. Don’t just say ‘build something.’ Giving examples is like showing a pattern. It makes everything easier to follow.
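A minimal sketch of assembling such a prompt in code, assuming the examples are kept as a plain list of strings. The helper name `build_few_shot_prompt` is hypothetical and not part of any library.

```python
def build_few_shot_prompt(task: str, examples: list[str]) -> str:
    """Combine a task with a few worked examples the model can imitate."""
    lines = [task, "", "For example:"]
    # Each example becomes one bullet, so the pattern is easy to spot.
    lines += [f"- {example}" for example in examples]
    lines += ["", "Now, apply a similar step-by-step style to the task above."]
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Explain how to tie a bow tie.",
    [
        "To tie a shoelace, you cross the laces and pull them tight...",
        "To tie a necktie, you place it around the collar and loop it through...",
    ],
)
print(prompt)
```

Keeping the examples in a list makes it easy to swap them or to stay within the suggested 2-3.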

Think step by step

Instruct the model to reason through the problem step by step. This leads to more logical, well-structured answers.

- BAD: Given this transcript, is the customer satisfied? (Reason: No prompt for structured reasoning.)

- GOOD: Given this transcript, is the customer satisfied? Think step by step. (Reason: Directly instructs the model to break down reasoning into steps.)

Ask the model to think step by step. Don’t ask it to give the final answer right away; that’s like asking someone to answer with the first thing that pops into their head. Instead, ask it to break down its thought process into simple moves, like showing each rung of a ladder as it climbs. For example, when thinking step by step, the model could (a) list each customer question, (b) check whether it was addressed, and (c) conclude that the agent answered only 2 of the 3 questions.

Assign a role

Specify a role or persona for the model. This provides context and helps tailor the response style.

- BAD: Explain how to fix a software bug. (Reason: No role or perspective given.)

- GOOD: You are a seasoned software engineer. Explain how to fix a software bug in legacy code, including the debugging and testing process. (Reason: Assigns a clear, knowledgeable persona, guiding the style and depth.)

Tell the model who it is. Maybe it’s a seasoned software engineer fixing a legacy bug, or an experienced copy editor revising a publication. By clearly telling the model who it is, you help it speak with just the right expertise and style. Suddenly, the explanation sounds like it’s coming from a true specialist, not a random voice.
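In chat-style APIs the role usually goes into a separate system message. The sketch below assumes the common `role`/`content` message layout; the helper `with_role` is hypothetical, and the exact message format depends on your provider.

```python
def with_role(role_description: str, user_request: str) -> list[dict[str, str]]:
    """Pair a persona (system message) with the actual request (user message)."""
    return [
        # Assumption: the provider accepts "system" and "user" roles.
        {"role": "system", "content": role_description},
        {"role": "user", "content": user_request},
    ]


messages = with_role(
    "You are a seasoned software engineer.",
    "Explain how to fix a software bug in legacy code, "
    "including the debugging and testing process.",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```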

Use XML to structure your prompt

Use XML tags to separate different parts of the prompt clearly. This helps the model understand structure and requirements.

- BAD: Here’s what I want: Provide a summary and then an example. (Reason: Unstructured, no clear separation of tasks.)

- GOOD:

<instructions> Provide a summary of the concept of machine learning. </instructions> <example> Then provide a simple, concrete example of a machine learning application (e.g., an email spam filter). </example>

Think of your request like a box. XML tags are neat little labels on that box. They help keep parts sorted, so nothing gets lost in the shuffle.
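One possible way to build a tagged prompt programmatically, shown as a sketch. The helpers `tag` and `build_prompt` are hypothetical; escaping the content is an optional design choice that keeps a stray `<` in the text from being mistaken for a tag.

```python
from xml.sax.saxutils import escape


def tag(name: str, content: str) -> str:
    """Wrap content in an opening and closing tag on their own lines."""
    return f"<{name}>\n{escape(content.strip())}\n</{name}>"


def build_prompt(sections: dict[str, str]) -> str:
    """Join tagged sections, in insertion order, separated by blank lines."""
    return "\n\n".join(tag(name, content) for name, content in sections.items())


prompt = build_prompt(
    {
        "instructions": "Provide a summary of the concept of machine learning.",
        "example": "Then provide a simple, concrete example of a machine "
        "learning application (e.g., an email spam filter).",
    }
)
print(prompt)
```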

Use Markdown to format your output

Encourage the model to use Markdown for headings, lists, code blocks, and other formatting features to produce structured, easily readable answers.

- BAD: Give me the steps in plain text. (Reason: No specific formatting instructions, less readable.)

- GOOD: Provide the steps in a Markdown-formatted list with ### headings for each section and numbered steps. (Reason: Directly instructs the model to use Markdown formatting, making output more structured and clear.)

- BAD: Correct the spelling and show the corrections. (Reason: No specific formatting instructions.)

- GOOD: Correct the spelling, showing <ins>additions</ins> and <del>deletions</del>. (Reason: Directly instructs the model to use HTML formatting, making output more structured and clear.)

Markdown is a simple formatting language that most models handle well. You can have them add neat headings, sections, bold highlights, and bullet points. These make complex documents more scannable and easier on the eyes.

Use JSON for machine-readable output

When you need structured data, ask for a JSON-formatted response. This ensures the output is machine-readable and organized.

- BAD: Just list the items. (Reason: Unstructured plain text makes parsing harder.)

- GOOD: Provide the list of items as a JSON array, with each item as an object containing `name` and `description` fields. (Reason: Instructing JSON format ensures structured, machine-readable output.)

Note: Use a JSON schema whenever possible.

Imagine you’re organizing data for a big project. Plain text is like dumping everything into one messy pile — it’s hard to find what you need later. JSON, on the other hand, is like packing your data into neat, labeled boxes within boxes. Everything has its place: fields like ‘name,’ ‘description,’ and ‘value’ make the data easy to read, especially for machines. For example, instead of just listing items, you can structure them as a JSON array, with each item as an object. That way, when you hand it to a program, it’s all clear and ready to use.
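Once the model returns JSON, a program can parse and check it before use. This is a minimal sketch using only the standard library; `parse_items` and `sample_output` are hypothetical, and the required fields mirror the `name` and `description` fields from the GOOD prompt above. A full JSON schema validator would be stricter.

```python
import json

REQUIRED_FIELDS = ("name", "description")


def parse_items(model_output: str) -> list[dict]:
    """Parse a JSON array of objects and check that required fields exist."""
    items = json.loads(model_output)  # raises ValueError on invalid JSON
    if not isinstance(items, list):
        raise ValueError("expected a JSON array")
    for index, item in enumerate(items):
        if not isinstance(item, dict):
            raise ValueError(f"item {index} is not an object")
        missing = [field for field in REQUIRED_FIELDS if field not in item]
        if missing:
            raise ValueError(f"item {index} is missing fields: {missing}")
    return items


# Hypothetical model reply, used only for illustration.
sample_output = '[{"name": "Widget", "description": "A small part"}]'
items = parse_items(sample_output)
print(items[0]["name"])  # prints: Widget
```

Failing loudly on a malformed reply lets the caller retry the request instead of passing bad data downstream.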

Prefer Yes/No answers

Convert rating or percentage questions into Yes/No queries. LLMs handle binary choices better than numeric scales.

- BAD: On a scale of 1-10, how confident are you that this method works? (Reason: Asks for a numeric rating, which can be imprecise.)

- GOOD: Is this method likely to work as intended? Give your reasoning, then answer Yes or No. (Reason: A binary question simplifies the response and clarifies what’s being asked.)

Don’t ask ‘On a scale of one to five...’. Models are not reliable with numeric scales; they often can’t consistently tell a score of 2 from a score of 3. ‘Yes or no?’ is simple. It’s clear. It’s quick. So, break your question into simple parts that the model can answer with just a yes or a no.
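Breaking a rating into Yes/No checks also makes the result easy to tally in code. The sketch below assumes each check was sent as its own question; the replies in `checks` are hypothetical, and `parse_yes_no` is an illustrative helper.

```python
from typing import Optional


def parse_yes_no(answer: str) -> Optional[bool]:
    """Map a reply to True/False, or None if it is not a clear Yes/No."""
    normalized = answer.strip().strip(".!").lower()
    if normalized == "yes":
        return True
    if normalized == "no":
        return False
    return None  # ambiguous reply; the caller may want to ask again


# Hypothetical replies to three separate Yes/No questions.
checks = {
    "Was the first customer question answered?": "Yes",
    "Was the second customer question answered?": "Yes.",
    "Was the third customer question answered?": "no",
}
results = {question: parse_yes_no(reply) for question, reply in checks.items()}
passed = sum(1 for value in results.values() if value is True)
print(f"{passed} of {len(results)} checks passed")  # prints: 2 of 3 checks passed
```

If a numeric score is still needed, the count of passed checks gives one without asking the model to pick a number.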

Ask for reason first, then the answer

Instruct the model to provide its reasoning steps before stating the final answer. This makes it less likely to rationalize an answer it has already given and more likely to think the problem through, which tends to produce more accurate results.

- BAD: What is the best route to take? (Reason: Direct question without prompting reasoning steps first.)

- GOOD: First, explain your reasoning step by step for how you determine the best route. Then, after you’ve reasoned it out, state your final recommendation for the best route. (Reason: Forces the model to show its reasoning process before giving the final answer.)

BEFORE making its final choice, have the model talk through its thinking. Reasoning first, answer second. That way, the model won’t be tempted to justify an answer it gave impulsively. It is also more likely to think deeper.
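One way to enforce this order is to ask for a marker before the final answer and split the reply on it. This is a sketch only: the marker text, both helper names, and the sample reply are assumptions made for illustration.

```python
ANSWER_MARKER = "Final answer:"


def reasoning_first_prompt(question: str) -> str:
    """Ask for step-by-step reasoning, with the answer on the last line."""
    return (
        f"{question}\n\n"
        "First, explain your reasoning step by step.\n"
        f"Then, on the last line, write '{ANSWER_MARKER}' followed by your answer."
    )


def split_reasoning_and_answer(response: str) -> tuple[str, str]:
    """Separate the reasoning from the answer using the last marker found."""
    reasoning, marker, answer = response.rpartition(ANSWER_MARKER)
    if not marker:
        raise ValueError("response does not contain the answer marker")
    return reasoning.strip(), answer.strip()


# Hypothetical model reply, used only for illustration.
sample = "Route A has tolls. Route B is longer but free.\nFinal answer: Route B"
reasoning, answer = split_reasoning_and_answer(sample)
print(answer)  # prints: Route B
```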

Use proper spelling and grammar

A well-written, grammatically correct prompt clarifies expectations. Poorly structured prompts can confuse the model.

- BAD: xplin wht the weirless netork do? make shur to giv me a anser?? (Reason: Poor spelling and unclear instructions.)

- GOOD: Explain what a wireless network does. Please provide a detailed, step-by-step explanation. (Reason: Proper spelling and clarity lead to a more coherent response.)

If your question sounds like gibberish, expect a messy answer. Speak cleanly. When you do, the response will be much clearer.

Example prompts

- Get alt text for an image. Use an image model, and say:

  Describe the image for a blind person. Follow the WCAG guidelines for alt-text.

- Compare 2 images. Upload 2 images and say:

  Compare these 2 similar images. Describe both images in detail, including every word used. Then explain the difference between the two visuals (including every word) to a blind person in a structured way.